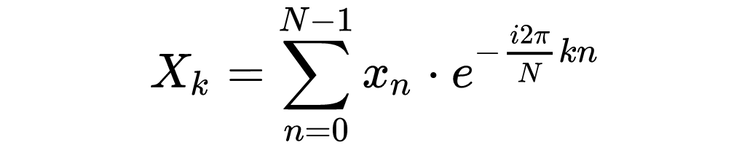

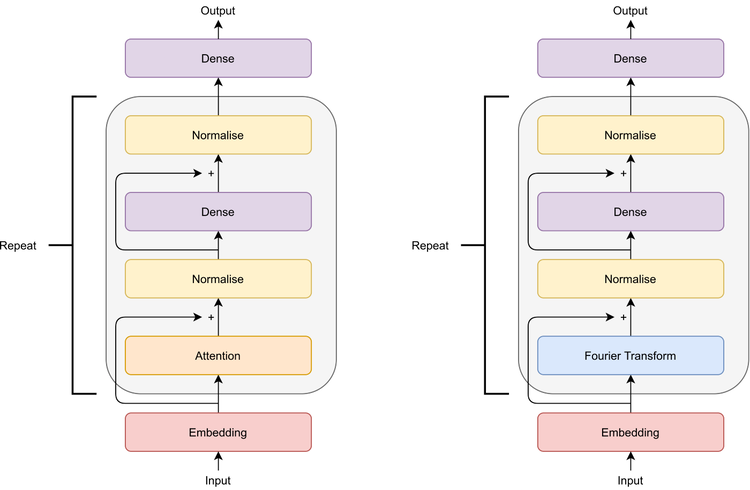

All else being equal, FTs have two computational advantages over attention:

- FTs can be computed fairly efficiently on graphics processing units (GPUs) thanks to the Fast Fourier transform (FFT) algorithm, which uses a divide-and-conquer approach to reduce the quadratic algorithm written above to O(n log n) time. Attention cannot be accelerated in this way, so its cost scales as O(n²) in the sequence length.
- FTs have no learnable parameters, so replacing attention with FTs reduces a model's memory footprint: there are no key, query and value projection matrices to store.
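The divide-and-conquer idea behind the FFT fits in a few lines. The sketch below is the textbook recursive radix-2 Cooley-Tukey FFT in pure Python, checked against the direct O(n²) DFT. It assumes the input length is a power of two; the function names are illustrative, and real GPU/TPU FFT libraries handle arbitrary lengths and are far more optimized.

```python
import cmath
import math
import random


def naive_dft(x):
    """Direct O(n^2) DFT: X[k] = sum_j x[j] * exp(-2*pi*i*j*k/n)."""
    n = len(x)
    return [
        sum(x[j] * cmath.exp(-2j * math.pi * j * k / n) for j in range(n))
        for k in range(n)
    ]


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT in O(n log n).

    Assumes len(x) is a power of two (an illustrative restriction).
    """
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a positive power of 2")
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])  # divide: DFT of the even-indexed samples
    odd = fft(x[1::2])   # divide: DFT of the odd-indexed samples
    out = [0j] * n
    for k in range(n // 2):
        # conquer: combine the two half-size DFTs with one "butterfly"
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


# Usage: both methods agree up to floating-point error.
random.seed(0)
signal = [random.random() for _ in range(64)]
assert all(abs(a - b) < 1e-9 for a, b in zip(naive_dft(signal), fft(signal)))
```

For n = 64 the direct sum performs n² = 4096 complex multiply-adds, while the recursion performs (n/2)·log₂ n = 192 butterflies, and the gap widens as n grows.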

Results

The researchers reported that FNet trained faster than BERT (80% faster on GPUs and 70% faster on tensor processing units, or TPUs) and with much higher stability. Its peak accuracy on the GLUE benchmark was 92% of BERT's.

They also proposed a hybrid architecture that replaced all but the last two attention layers in BERT with FT layers. It reached 97% of BERT's accuracy with only a minor penalty in training time and stability. This is a highly encouraging result: it suggests that the undesirable scaling properties of transformers can be avoided with more judicious approaches to token mixing, which is especially important in resource-constrained environments.
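To make the hybrid layout and the parameter savings concrete, here is a small pure-Python sketch. It assumes the FNet mixing rule of taking the real part of a 2D DFT over the hidden and sequence dimensions, BERT-Base-like dimensions (12 layers, d_model = 768), and attention layers with Q, K, V and output projections that include biases. All helper names are hypothetical, and only the token-mixing sublayers are counted, since the feed-forward blocks are unchanged.

```python
import cmath
import math


def dft_1d(v):
    # Direct O(n^2) DFT for clarity; a real model would call an FFT here.
    n = len(v)
    return [
        sum(v[j] * cmath.exp(-2j * math.pi * j * k / n) for j in range(n))
        for k in range(n)
    ]


def fourier_mix(x):
    """Parameter-free token mixing (assumed FNet-style): Re(DFT_seq(DFT_hidden(x))).

    x is a seq_len x hidden list of rows, one row per token embedding.
    """
    rows = [dft_1d(token) for token in x]  # DFT along the hidden dimension
    seq_len, hidden = len(rows), len(rows[0])
    # DFT along the sequence dimension, one transform per hidden feature
    cols = [dft_1d([rows[t][h] for t in range(seq_len)]) for h in range(hidden)]
    # Keep only the real part and transpose back to seq_len x hidden
    return [[cols[h][t].real for h in range(hidden)] for t in range(seq_len)]


def attention_mixing_params(d_model):
    # Weights plus biases of the Q, K, V and output projections (assumed layout)
    return 4 * d_model * d_model + 4 * d_model


def layer_plan(num_layers, num_attention_at_end):
    # Hybrid stack: Fourier mixing everywhere except the last few layers
    if not 0 <= num_attention_at_end <= num_layers:
        raise ValueError("invalid number of attention layers")
    fourier = ["fourier"] * (num_layers - num_attention_at_end)
    return fourier + ["attention"] * num_attention_at_end


def mixing_params(plan, d_model):
    # Fourier layers contribute no parameters; only attention layers count
    return sum(attention_mixing_params(d_model) for kind in plan if kind == "attention")


# Usage with the assumed BERT-Base-like sizes
print(mixing_params(layer_plan(12, 12), 768))  # 28348416 (all attention)
print(mixing_params(layer_plan(12, 2), 768))   # 4724736 (hybrid)
```

Under these assumptions, each retained attention layer costs about 2.4 million mixing parameters at this width, while the Fourier layers cost none regardless of sequence length.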