During our recent presentation at the Eyes-Off data summit in Dublin, we were asked a question about FHE. The question went something like this:

“If you can evaluate encrypted information, couldn’t that property be used to evaluate the content of a message? And doesn’t that property break the security of encryption?”

The answer varies, depending on the security model. In the “classic” symmetric example of FHE, we can outsource the processing of some data to a cloud that we might not otherwise trust.

In this model, we hold the decryption key and send no supplementary information back to the server. The server can only perform the processing and thus learns nothing (and can learn nothing) about the outcome.

However, how can we be sure that the server has run the right process? What if someone swapped out the intended algorithm with something that could analyse something different about the information we supplied to it?

In the symmetric case, we would receive an incorrect result from the calculation. Guarding against that possibility is important for the symmetric-key case.

However, this is especially important in the context of some applications, in which the usage model might involve some third party receiving information about the outcome of the computation. This is of particular interest in models of FHE that feature multi-party computing. In that case, we want to be certain that only the agreed-upon process was run and that the third party can only learn what we are comfortable with them knowing.

The above invokes the notion of an attestation, a proof that can be used to guarantee that a specific operation has been performed, even when we can’t directly see that process ourselves.

Attestation is a common theme in another area of confidential computing, specifically with respect to trusted execution environments, or TEEs. A TEE is a hardware-based solution to the problem of trusted computing; not only is the hardware itself often physically isolated from the rest of a computing system and inaccessible even to system administrators, but everything that happens inside the TEE is subjected to additional checks such as cryptographic validation, which can be used to attest to the fact that only authorised code is run.

Notionally, this provides some additional security. However, what if the entity that authorises the code and the entity that submits the data aren’t the same? How can we design a system that ensures trust across all parties, including the users of a service?

Ultimately, this is a very interesting question. In this article, we expand on the answer that we gave at the conference. There, we selected an example from a subject that has been in the news a lot of late: the question of how we prevent end-to-end encrypted services from being used to share child abuse material.

We’ll start by filling in some of the background to this debate, and then put forward a toy model in which we apply FHE to the evaluation of messages that are sent over an E2EE service. Here, we want the server to be able to learn something about an image, but we don’t want to compromise the overall security of the system. In the process, we demonstrate the basic idea of how attestation would be required to ensure the validity of the computation, and then discuss what would be required for the deployment of such a system at scale.

Moderating the internet is hard work. Any platform that allows users to communicate and share content faces a slew of difficulties when it comes to deciding what’s allowable on their service.

Be it contentious yet legal free speech, cyberbullying, or other sources of potential harm (such as pro-anorexia communities), there are many aspects of running an online service that can run afoul of legal and social conditions. At the same time, services are also expected to provide and protect free expression, and where necessary to shield users from harm.

Achieving this balance is not trivial. The scope and reach of the internet and the scale at which some of the biggest platforms operate ensure that many companies find themselves in the crosshairs of regulators around the world, especially those platforms dedicated to social media. The extent to which these platforms are expected to censor or allow given forms of speech often devolves into a legal and political battleground.

However, one rare point of universal agreement is the prohibition of child sexual abuse material, or CSAM for short. Combating the sharing of CSAM over the internet is rightly a priority for both law enforcement and tech companies the world over.

Despite the shared desire to tackle this problem, the question of how to achieve this is one of the most fraught topics in an already complicated field. And as of late, the spread of end-to-end encryption (E2EE) has further soured the relationships between regulators and the tech sector.

End-to-end encryption is a critical technology that ensures the security and privacy of messages you send over the internet. For example, when you communicate with your bank over the internet, neither you nor the bank want people to be able to see the content of those messages. If those messages are intercepted, we want them to be unreadable to anyone who shouldn’t have access. You also want to be able to verify that it is indeed the bank that you’re talking to!

Encryption allows us to scramble these messages in such a way that it’s nearly impossible to unscramble them without knowing a specific piece of information. Thanks to what is known as public-key encryption and message authentication techniques, it’s possible to safely exchange all the information needed to protect your communications, even with people you haven’t met in person. This allows us to securely communicate with people and services all over the globe.

Under end-to-end encryption, only the sender and receiver of the messages can unscramble the messages that they send to each other. This is essential to the security and functionality of the modern internet; without it, many of the utilities that we take for granted such as banking apps, e-commerce and private messaging platforms would be too vulnerable to function correctly.

However, when it comes to the fight against CSAM, end-to-end encryption poses a problem. In brief, the difficulty of detecting CSAM on encrypted messaging apps such as WhatsApp is that E2EE ensures that there is no point in the messaging pipeline where the contents of the message can be intercepted and examined.

That’s obviously a necessary property for protecting communications. However, the argument of regulators is that the nature of this protection offers no distinction between cybercriminals and other malicious parties, who have no business reading anybody’s messages, and law enforcement, who might have legitimate reasons to examine a message. And when it comes to legitimate reasons, intercepting and eliminating child abuse is the ultimate argument.

Of course, encryption and privacy have always been a significant battleground in the tech space, and even within governments there are often dissenting views. For example, in the UK, the Home Office has pushed back strongly against Meta’s plans to introduce end-to-end encryption to Facebook Messenger, while the Information Commissioner’s Office (itself a UK government body) takes the view that E2EE has a proportionally greater effect in protecting children by preventing criminals and abusers from accessing their messages.

Proposals that try to find a way around this problem have previously been driven by suggestions on circumventing the encryption itself. That could involve weakening or removing the core cryptography by creating “back-doors” for law enforcement use, or storing users’ private decryption keys in a centralised way that is accessible to law enforcement (a “key escrow” method). However, both of these methods introduce unacceptable security flaws in what should be a very secure process, and the dangers of implementing these approaches vastly outweigh the benefits.

To try and find a different way around the difficulties of E2EE, a recent push in this sector has come through the suggested use of so-called “client-side” scanning.

The reasoning and methodology behind client-side scanning go something like this: if we want to preserve the integrity of end-to-end encryption and evaluate messages for illegal content, we have to check messages before they enter the encrypted pipeline.

Under a regulatory regime in which client-side scanning is mandated, companies such as WhatsApp would have to incorporate an approved technology that can detect CSAM into their platforms. This technology, which would notionally be “accredited” by the relevant regulatory authority, would then inspect user messages before they are encrypted and sent.

However, amongst the many major criticisms levelled against client-side scanning is the argument that a technology that can read and evaluate user messages (and, crucially, report on the content) still damages the protection of end-to-end encryption.

Not only does this capability introduce an attack vector that could be used by malicious parties, but it leaves open the possibility for mission creep, in which authorised parties start scanning for content beyond the original remit under the guise of preventing other crimes.

There’s no easy answer to this criticism, and previous industry-led attempts to apply such technologies have led to bruising clashes between tech companies and privacy advocates. Apple’s own attempt to develop and implement a client-side scanning capability for CSAM in iCloud met with such resistance that the plans were shelved after just a couple of months.

Besides the core technical aspect of encryption, which is understood to be essential to securing the modern web, tech companies are well aware of the damage that failing to respect user privacy can do to their business, and are now much more cautious about implementing measures that could be seen to be infringing on users’ rights.

However, this is now putting them on a collision course with regulators and law enforcement, who are seeking to solve the issue by leaving tech companies no other choice but to comply or withdraw products and services from the market. While the full scope of this battle is taking place at the supranational level, an interesting test-case for the impact of such legislation looks set to present itself in the form of the UK’s forthcoming Online Safety Bill.

The content and function of this bill exceed the scope of checking for CSAM alone, but do include provisions for client-side scanning. If fully implemented, the consequences will be extremely wide-ranging and would provide an impactful real-world test-case for the effect of such approaches on user privacy.

Despite the possible utility of this bill as a test-case, it’s also uncertain as to how useful the specific outcome will be. So broad-ranging are the mandatory changes that many companies (including WhatsApp and Signal) have indicated that they would simply leave the UK as a market.

But what alternatives are there?

Before we move on to specific technical considerations, we have to ask ourselves what an ideal solution to this problem would look like. With that in place, we can then start to consider whether it is possible to build this ideal solution using the technologies that exist in 2023, and then move on to the question of whether it is achievable in practice.

When thinking about this ideal solution, we take the following principles as essential:

Here’s what an ideal solution would look like under these constraints. For this first pass, we’ll describe things in terms of high level abstractions; we won’t get into specific details just yet:

We have two users who want to exchange messages (including images) over an encrypted communications channel.

In the middle of this communications channel, we want to construct a system that can detect any CSAM, regardless of whether or not this material is encrypted. Even on detecting CSAM, this system cannot view or decrypt the message; it can only communicate the fact that the message contains CSAM.

All communications between the two users pass through this system and are assessed. At the same time, the users receive some proof or verification attached to each message (an attestation) that the system is only able to detect CSAM. This guards against the problem of “mission creep”.

This solution would resolve the following outstanding difficulties:

So with the above in mind, we now ask ourselves: is it possible to design and build such a solution?

Thus far, the hardest question to answer has always been whether it is possible to build a system that can evaluate encrypted information. Under the existing encryption methods used by platforms such as WhatsApp, the answer is no. These encryption methods don’t preserve the underlying structure of the data, so we can’t perform any kind of analysis on the data.

However, the technology that allows for computation over encrypted data does exist. Fully homomorphic encryption, or FHE, is a novel method of encrypting data in such a way that we can perform any computation that we wish to on that data, without needing to decrypt it first.
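To make the idea of computing on ciphertexts concrete, here is a minimal sketch using a toy Paillier cryptosystem. This is a stand-in chosen for brevity: Paillier is only additively homomorphic, not fully homomorphic, and the tiny primes and function names below are illustrative assumptions that offer no real security. It shows only the core principle: a party without the decryption key can combine ciphertexts, and the key holder decrypts the result of the computation.

```python
import math
import secrets

# Toy Paillier parameters (ASSUMPTION: tiny primes for illustration, NOT secure).
P, Q = 1789, 1861
N = P * Q
N_SQ = N * N
G = N + 1
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P-1, Q-1)
MU = pow(LAM, -1, N)  # valid inverse because G = N + 1


def encrypt(m: int) -> int:
    """Encrypt an integer 0 <= m < N under the public key (N, G)."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Decrypt with the private values (LAM, MU)."""
    u = pow(c, LAM, N_SQ)
    return ((u - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Server-side step: add two plaintexts by multiplying their ciphertexts."""
    return (c1 * c2) % N_SQ


def scale_encrypted(c: int, k: int) -> int:
    """Server-side step: multiply a plaintext by a public constant k."""
    return pow(c, k, N_SQ)


if __name__ == "__main__":
    a, b = encrypt(20), encrypt(22)
    total = add_encrypted(a, b)        # computed without the private key
    print(decrypt(total))              # the key holder sees the sum
    print(decrypt(scale_encrypted(a, 3)))
```

A fully homomorphic scheme extends this idea so that both addition and multiplication of encrypted values are available, which is what allows arbitrary computations to be expressed.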

So with that in mind, let’s take a look at a possible solution in this context.

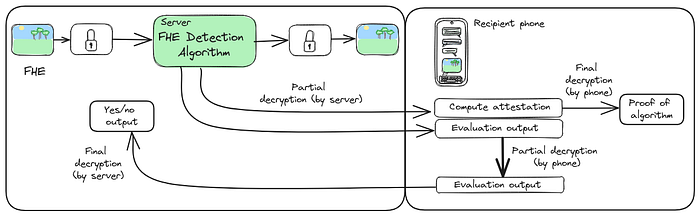

As before, our two users want to securely exchange messages. For the majority of their communications (text, etc.), they use an existing E2EE technology such as the protocol that underpins Signal and WhatsApp. However, their image messages are encrypted such that we can apply an FHE-based computation to the contents.

Whenever a user sends an image, this image is first sent to the messaging service’s servers for analysis.

Let us assume first that an algorithm exists that can perfectly assess whether an image contains CSAM. This is still a big assumption, but we’ll come back to re-examine this point later.

Under FHE, we can execute this algorithm on the image without having to decrypt the image. Of course, to maintain the guarantee of only evaluating for CSAM, we also have to provide an attestation that we ran only the correct detection algorithm.

With respect to the actual code itself, we could attest to the validity of the algorithm through a TEE-based approach. However, for the end-user, we can also consider a method of attestation that doesn’t involve some trusted third party. In this sense, the attestation is instead a form of zero-knowledge proof by which the party that applies the algorithm is forced to act honestly, as efforts to apply a different algorithm would be detected by the users.

Here’s a toy model that describes what we’re talking about. Executing the detection algorithm on an encrypted image file will also alter the underlying content of the file in some way, and we may be able to use this change as a way of assessing whether a specific, agreed-upon algorithm was applied.

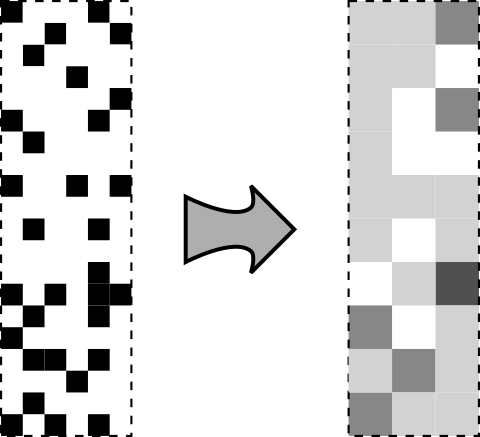

For example, consider a simple “perceptual hashing” approach to detecting whether an image contains a depiction of something that we want to filter. We’ll use a very basic “averaging” approach as an example. In this method, we:

The output of this process is a “perceptual hash”, a binary string (represented above as the greyscale bar) that encodes a reduced representation of the image. The above is a very simplistic approach, but is intended to be relatively robust against attempts to disguise the content. Unlike a traditional cryptographic hash, a perceptual hash does not need to match exactly; we can evaluate how close a given hash of an image is to any hash that we hold in a database of forbidden content.
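As a rough illustration, the sketch below implements a commonly described form of averaging hash on an unencrypted greyscale image: shrink the image to a small grid by block averaging, take the mean of the grid, and emit one bit per cell depending on whether it is brighter than the mean. The grid size, the function names and the use of Hamming distance for comparison are assumptions made for this example, not necessarily the exact procedure depicted in the figure, and nothing here runs under FHE.

```python
def average_hash(pixels, size=8):
    """Return a list of size*size bits for a 2D list of grey values.

    ASSUMPTION: the image has at least `size` rows and `size` columns.
    """
    height, width = len(pixels), len(pixels[0])
    cells = []
    for by in range(size):
        y0, y1 = by * height // size, (by + 1) * height // size
        for bx in range(size):
            x0, x1 = bx * width // size, (bx + 1) * width // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))  # block average = shrunken pixel
    mean = sum(cells) / len(cells)
    return [1 if cell > mean else 0 for cell in cells]


def hamming_distance(hash_a, hash_b):
    """Count the bit positions in which two equal-length hashes differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


if __name__ == "__main__":
    # A synthetic 16x16 gradient stands in for a real image.
    image = [[8 * x + y for x in range(16)] for y in range(16)]
    brighter = [[v + 10 for v in row] for row in image]
    print(hamming_distance(average_hash(image), average_hash(brighter)))
```

Because a uniform brightness change shifts every cell and the mean by the same amount, the two hashes in the usage example agree, which is the kind of robustness that distinguishes a perceptual hash from a cryptographic one. A matching system would then flag an image when its distance to any stored hash falls below a chosen threshold.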

In the context of FHE, we could add some small additional information that is only known to the sender and receiver, which will (under the application of the correct algorithm) change in some predictable way. An example of such a change (using the algorithm described above) is given below.

We could then append this information to the image prior to encryption, generating a hash that contains both the image and this information.

On delivery of a file containing the output of the encrypted algorithm, the recipient could then reconstruct the 2-dimensional version of the hash and thus prove that the correct algorithm has been applied. Because the computation happens under FHE and the actual content is invisible, this prevents the server from acting maliciously by falsifying the attestation.

This is of course a very simple approach; more complex approaches that cover a wider range of security considerations could be applied, but this is a basic illustration of the concept.

So, the output of this computation should ideally give us one or more encrypted files; these contain values that indicate

We can then send the original encrypted image alongside the additional encrypted material onwards to the recipient. However, there are a few more points that we’d need to address to eliminate potential sources of weakness.
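The toy attestation idea can be sketched in the clear as follows. Everything in this block is an assumption made for illustration: the shared secret is expanded into extra pixel rows with HMAC-SHA256, the agreed algorithm is a simple averaging hash, and the recipient checks the server’s output by recomputing the expected value. In the model described above the server would perform its step under FHE and never see these values; here the steps run on plaintext purely to show the logic of the check.

```python
import hashlib
import hmac


def average_hash(pixels, size=8):
    """Agreed-upon algorithm (assumed): block-average to size x size, threshold at the mean."""
    height, width = len(pixels), len(pixels[0])
    cells = []
    for by in range(size):
        y0, y1 = by * height // size, (by + 1) * height // size
        for bx in range(size):
            x0, x1 = bx * width // size, (bx + 1) * width // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    return [1 if cell > mean else 0 for cell in cells]


def canary_rows(shared_key, nonce, width, rows=8):
    """Expand a secret known only to sender and recipient into extra pixel rows."""
    stream, counter = b"", 0
    while len(stream) < width * rows:
        block = nonce + counter.to_bytes(4, "big")
        stream += hmac.new(shared_key, block, hashlib.sha256).digest()
        counter += 1
    return [list(stream[r * width:(r + 1) * width]) for r in range(rows)]


def with_canary(pixels, shared_key, nonce):
    """Sender step: append the secret rows to the image before encryption."""
    return pixels + canary_rows(shared_key, nonce, len(pixels[0]))


def verify(image, reported_hash, shared_key, nonce):
    """Recipient step: recompute the expected output and compare it with the server's."""
    return average_hash(with_canary(image, shared_key, nonce)) == reported_hash


if __name__ == "__main__":
    key, nonce = b"hypothetical shared secret", b"message-0001"
    image = [[8 * x + y for x in range(16)] for y in range(16)]
    honest = average_hash(with_canary(image, key, nonce))  # server ran the agreed algorithm
    tampered = [1 - bit for bit in honest]                 # server ran something else
    print(verify(image, honest, key, nonce), verify(image, tampered, key, nonce))
```

The design point is that the server cannot predict the contribution of the secret rows, so an output produced by a different computation will not match what the recipient expects.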

Under FHE, it is possible to construct a system whereby the secret information needed to decrypt information can be spread across more than one party.

We can make use of this property to ensure that, while the recipient can participate in the decryption of the additional encrypted material (which is necessary for ensuring the attestation is valid), they alone cannot see the result of the analysis in the clear, and only the messaging service is capable of performing the final decryption step. This prevents the recipient from being able to manipulate the response to suggest the message is “clean”. In this suggested protocol, we do not need to trust either the user or their messaging client to be honest.

This also applies to the server; we can have the server perform a partial decryption on the attestation, which can be finalised and completely decrypted by the user’s device, thus preventing the server from seeing or manipulating the attestation.
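The flow of partial decryption can be illustrated with a deliberately simplified model in which the secret key is split additively between the server and the client. The masking scheme, modulus and function names below are assumptions chosen for clarity; real multi-party FHE schemes are considerably more involved, and this sketch is not secure for repeated use of the same key.

```python
import secrets

MODULUS = 2**61 - 1  # ASSUMPTION: any modulus larger than the message space works here


def keygen_shares():
    """Split the secret key into one share for the server and one for the client."""
    return secrets.randbelow(MODULUS), secrets.randbelow(MODULUS)


def encrypt(message, server_share, client_share):
    """Mask the message with the full key, which no single party holds."""
    return (message + server_share + client_share) % MODULUS


def partial_decrypt(ciphertext, share):
    """Remove one party's share; the output is still masked by the other share."""
    return (ciphertext - share) % MODULUS


if __name__ == "__main__":
    server_share, client_share = keygen_shares()

    # Attestation: server decrypts partially, the user's device finishes.
    attestation = encrypt(424242, server_share, client_share)
    half_open = partial_decrypt(attestation, server_share)
    print(partial_decrypt(half_open, client_share))

    # Detection result: the device decrypts partially, the server finishes.
    verdict = encrypt(1, server_share, client_share)
    half_open = partial_decrypt(verdict, client_share)
    print(partial_decrypt(half_open, server_share))
```

Whichever party applies its share last is the only one that sees the plaintext, which is how the two flows described above assign the attestation to the user and the detection result to the messaging service.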

We should, for the sake of completeness, also periodically rotate the secret keys held by both the server and the client device under this model. This guards against the possibility of the server being able to reconstruct the client key over time by introducing small errors into the hash, and then examining the response (a so-called “reaction attack”).

With respect to the security aspects, the above solution is based on technology and methods that currently exist. However, we now need to consider the practical complexities.

First of all, we cannot assume the existence of a “perfect” algorithm for detecting CSAM. This would be an algorithm that perfectly detects all such material even under attempts to disguise the image contents, such as changing the image aspect ratio, trimming the image, or introducing specially-crafted noise to produce incorrect outputs from the algorithm. Such an algorithm would also not generate false positives, where legal material (including legal adult material) would be incorrectly identified as CSAM.

In reality, scanning algorithms intended to detect CSAM (including those proposed for use in client-side scanning) are known to produce both false negatives and false positives, and despite efforts to overcome attacks there are still known ways of evading these technologies. This includes attacks against the “perceptual hashing” techniques used to detect instances of CSAM that are already known to the authorities, as well as techniques used to detect novel CSAM such as artificial intelligence networks trained to detect new images.

However, by far the most significant practical limitation thus far lies in the speed at which we can execute algorithms under FHE.

Such a system would be required to work at least at a national scale. As of 2017, WhatsApp users were sending over 4.5 billion images per day, and that number is certain to have grown since then. Analysing the sheer number of images sent over WhatsApp in a country the size of the UK would already be difficult even if the images were not encrypted; doing so with a technology that is a million times slower when run on a typical CPU than unencrypted computing would be a non-starter.
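A back-of-the-envelope calculation shows why. The image volume and the million-fold slowdown are the figures quoted above (the volume being a worldwide number); the unencrypted cost of one millisecond per image is purely an assumption for illustration, as is the function name.

```python
SECONDS_PER_DAY = 86_400


def cpu_cores_required(images_per_day, plaintext_seconds_per_image, fhe_slowdown):
    """Estimate how many CPU cores must run continuously to keep up with the load."""
    images_per_second = images_per_day / SECONDS_PER_DAY
    fhe_seconds_per_image = plaintext_seconds_per_image * fhe_slowdown
    return images_per_second * fhe_seconds_per_image


if __name__ == "__main__":
    cores = cpu_cores_required(
        images_per_day=4.5e9,               # 2017 figure quoted in the article
        plaintext_seconds_per_image=0.001,  # ASSUMPTION: 1 ms per image unencrypted
        fhe_slowdown=1_000_000,             # slowdown quoted in the article
    )
    print(f"{cores:,.0f} cores running around the clock")
```

Under these assumptions the load works out to roughly 52,000 images per second, each taking around 1,000 seconds of CPU time, or on the order of 50 million cores kept permanently busy.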

In fact, what we’re proposing here isn’t exactly new; fully homomorphic encryption has already been suggested by GCHQ as a mechanism for securely implementing approaches to CSAM detection. Despite the notional capabilities of the technology, the conclusion then was that the computational cost of FHE was simply too high.

Of course, there are other ways of making this both faster and less intrusive. For starters, if we can reduce the number of messages we want to scan, then we could significantly reduce the total compute required. This might involve a “simple” solution, such as randomly checking only a handful of messages. While this could at best only catch a sub-sample of abuse images, the knowledge that the system performs random checks might be enough to deter users from sending CSAM over the service.
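The deterrent effect of random checks can be quantified with a simple model. Assuming each image is checked independently with the same probability, and assuming for the sake of the example that a checked image is always classified correctly, the chance that at least one of a sender’s images is caught grows quickly with the number of images sent. The function name and the sample rates below are illustrative.

```python
def detection_probability(sample_rate, images_sent):
    """Probability that at least one of `images_sent` images is selected for checking."""
    return 1.0 - (1.0 - sample_rate) ** images_sent


if __name__ == "__main__":
    for rate in (0.001, 0.01, 0.05):
        for count in (1, 10, 100, 1000):
            p = detection_probability(rate, count)
            print(f"sample rate {rate:>5}: {count:>4} images -> {p:.3f}")
```

Checking even one image in a hundred cuts the compute requirement a hundredfold, while a sender who shares a hundred images still has a better-than-even chance of being sampled at least once under this model.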

Alternatively, we could apply a more complex heuristic, such as checking messages sent by users exhibiting behaviours or patterns of communication that might be associated with CSAM networks.

These approaches certainly help, but even random sampling is going to require a massive improvement in FHE throughput. So, if we’re to be able to implement a more ideal solution than client-side scanning, we need FHE to be faster. That’s where we at Optalysys come in.

Ultimately, the technical and social implementation of such a system is a broader subject than we could possibly address in a single article. However, in light of ongoing developments in the FHE space, it’s time to start thinking beyond the blunt instrument of client-side scanning as a solution to this problem.


© 2024 All rights reserved Optalysys Ltd
