Self-driving vehicles and Fourier-optical computing: A way forward?

Over the past half year at Optalysys, we’ve been testing the prototype of our novel Fourier-optical processor technology in a broad range of applications.

At the core of our concept is the fusion of silicon photonics with free-space optics, a technique that aims to couple the incredible computational capabilities of Fourier optics to the immense data throughput that can be achieved with modern integrated photonic components.

Thus far, every time we have put our technology to the test in a different application, it has yielded remarkably tractable results that have allowed us to successfully apply the system across radically different tasks. The application space might be vast, but this model of information processing has proven versatile enough to tackle everything we’ve thrown at it. While our previous work usually focuses (often in great detail) on whether we can perform specific operations, it’s also worth looking at the broader picture and considering what a dramatic shift in computational efficiency might mean for the world around us.

We start by considering one of the most challenging applications for high-performance computing: Self-driving vehicles.

Key challenges for self-driving vehicles

Self-driving vehicles as a technology offer us a revolutionary future. We’ve all heard of the benefits; widespread adoption of SDVs could give us a world which is safer, more convenient and more environmentally friendly. However, achieving that future is reliant on cracking three key problems:

- Giving self-driving vehicles the ability to accurately detect the world around them.

- Packing enough computing capability into these vehicles so that they can make sense of what they see and make intelligent decisions.

- Doing all of the above well enough that self-driving vehicles are vastly safer than human drivers.

Comparison with human drivers

Most self-driving vehicles bristle with sensors which provide different types of sensory information. These include visual sensors such as cameras, range-finding technologies such as radar and ultrasound, and also a relatively new technology known as LIDAR (LIght Detection and Ranging), which can generate highly accurate depth information, dense enough that the distinct shape of objects can be obtained.

Autonomous systems never get tired, aren’t easily distracted and have a 360 degree field of vision. Compare this with humans, where our eyes only capture a 140 degree cone of vision, and tiredness and inattentiveness are real dangers. Yet there’s a paradox here; despite our disadvantages, most humans can drive acceptably well, but SDVs have yet to achieve level 5 driving safety and thus still legally require human supervision.

Clearly something else is going on here; self driving cars almost certainly have an advantage over humans when it comes to detection. Even allowing for situations in which individual systems might struggle (such as LIDAR or cameras in heavy fog), human eyesight faces similar problems while the vehicle will almost always have a redundancy such as radar to fall back on. The answer to this paradox therefore lies in the one place where humanity still has an advantage: once you have that information, what do you do with it? And is it possible to do more with less?

The paradigm divergence in self-driving vehicles

To answer this, we have to look at how the future of the field is shaping up. There are currently two broad methods as to how sensor data is used in self driving vehicles; the “end-to end” or “behavioural reflex” approach, and the “mediated perception” approach.

While directed towards the same end (a car that can safely navigate its environment), these two methods are radically different to one another.

End-to-end self-driving

The end-to-end method is the closest to the abstract idea of “learning” to drive, in which a single deep neural network learns a direct mapping from sensor input data to an output that safely drives the vehicle. This can be surprisingly effective even when performed using limited data; there are relatively few outputs for the network, and a huge amount of the information that surrounds us is irrelevant to the actual task of driving.

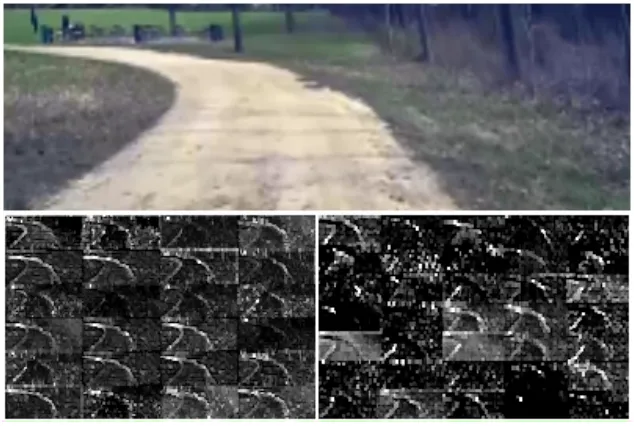

An unpaved road and the first and second layer feature maps extracted from an end-to-end self-driving network. The network was not explicitly trained to recognise features such as the edges of the road; it learned that these features were important solely from the angle of a steering wheel controlled by a human. From the NVIDIA report on end to end learning for self driving cars.

In the abstract sense, an end-to-end system will bring a vehicle to a halt at a stop sign because it has learned to do so from experience. It has seen many examples of stop signs and how to react to them in its training data, and thus can infer this behaviour to unseen stop signs. Somewhere in the network is an artificial neural pathway that stops the car when inputs corresponding to a stop sign enter the system.

However, this comes at a cost; the inner workings of such systems are opaque and hard to scrutinise, and it is impossible to guarantee that there aren’t edge cases that could cause the system to completely fail. Despite the relatively lightweight networks required, training an end to end network therefore requires training data and demonstrable performance that is sufficiently comprehensive to the task of driving under all conditions.

This is the primary challenge to the end-to-end approach: if we require a single network to be responsible for the entire driving task, this requires lots of data, and it must be must also be evenly distributed during training. Neural networks rely on independent, identically distributed input data and will therefore forget concepts they learned in the past if they do not constantly see examples of them during re-training.

To put this more plainly, if an autonomous vehicle was performing very well, but had a particular weakness with stop signs, supplying the network with stop sign examples only would cause catastrophic forgetting of all the other facets of driving that it had learned. To fix the problem we would need to instead create many examples of what to do at stop signs and randomly place them in the existing dataset and retrain on that. The downside of all this is that every time a new weakness is found in how the vehicle drives, the dataset grows, and the whole set must be used for re-training. This comes at an immense cost, both in terms of time and electrical energy.

Figuring out where exactly an appropriately comprehensive level of data lies is not easy, and it may be the case that an end-to-end approach will ultimately never be acceptable (at least from a regulatory standpoint) without some radical developments in AI techniques.

The mediated perception approach

By contrast, the mediated perception approach explicitly breaks driving down into rules which can be obeyed based on a semantic understanding of the world around us. A mediated perception system will halt at a stop sign because a network dedicated to detecting stop indicators passes this information to a more conventional computing process in which the rules “you have to stop at stop signs” are explicitly stated. This allows the system heuristics to be explicitly engineered, and if problems in the system are detected, then individual modules can be independently updated.

This breakdown of information, first into meaning and then into action, makes it much easier to identify and control the “thought process” of a vehicle engaged in a driving task, meaning that it is more transparent in how decisions were made, and in legal situations, engineers can point to the logical decision making process. But it comes at a considerably greater computational cost. The most advanced on-the-road implementation of such a system, Tesla Autopilot, runs 48 different networks dedicated to separate semantic tasks.

The effect of this breakdown and how it relates to the way the vehicle perceives the world can be seen in the following video, which demonstrates how mediated perception works in practice. In fact, at the start of the video, we can even see how the system detects and reacts to the stop sign example we described above. The sign itself is detected (indicated by the bounding box around it), and because the controlling system has a semantic understanding of what this means, it is able to mark the associated road marking with a red line!

Autopilot augmented view from the forward facing camera on a Tesla vehicle, showing a real-world example of how the semantic segmentation approach allows the vehicle to construct an understanding of how it relates to the world around it.

Autopilot isn’t designated as a fully autonomous system. Officially, it’s a highly advanced driver assistance suite that falls into the level 2 category for autonomous vehicles, which requires full driver supervision. Nevertheless, Tesla makes no secret of the fact that Autopilot is intended to eventually reach level 5, and are designing their onboard hardware accordingly. If self driving vehicles are to reach this level, the mediated perception approach looks to be the more likely route, which brings us back to our main point:

This is going to require a lot of computing. Can we do better than what is currently on offer?

Optical computing for self-driving vehicles

Large-scale deep learning techniques are a particularly difficult thing to apply in mobile and edge environments, and self-driving vehicles represent a convergence of different factors that make them especially difficult.

For starters, unlike many other mobile applications, there are human lives at stake at all times. This includes not only the occupants but everyone who might be around the vehicle, be they pedestrians or other road users. For us to trust them, self-driving vehicles have to reach the point where they get an immensely complex task right every single time in all possible conditions.

Not only that, but because vehicles can travel at significant speed, they have to both make and act upon those decisions in a fraction of a second. This prevents much of the computation being offloaded to remote datacentres; not only is the latency and bandwidth too high, even a brief communications failure could have severe consequences.

That’s not to say that there aren’t useful things to be done with networking; allowing vehicles to talk to one another (as well as to other road safety systems) has the potential to massively improve safety, but for the most part the core self-driving calculations have to be performed locally.

This in turn raises the issue of electrical power. Running multiple convolutional networks in parallel as part of a mediated perception approach requires significant amounts of energy, even on electronic systems designed for the task. Autonomous electric vehicles are expected to be the future of transport, which puts computational power and vehicle range in direct competition.

Convolution-based approaches are central to many of the networks used in both the end-to-end approach (such as the PilotNet network developed by NVIDIA) and in mediated perception. Indeed, a key part of mediated perception lies in image segmentation and classification; in the video above, we can see the effects of these networks as they draw bounding boxes around different aspects of the road environment, allowing the car to construct an internal representation of itself relative to its surroundings.

So to sum up, if self driving vehicles are going to be a reality, we need computing systems which can process vast amounts of data, mostly through convolutional techniques, with incredibly low latency and power consumption.

Fourier-optical computing offers the perfect solution

We’ve previously described how our Fourier-optical computing technology can be applied to convolution-based tasks in deep learning, a use-model that has some significant advantages over even the best conventional electronics. Indeed, the technology itself was designed from the ground-up to achieve superior performance in convolutional machine learning tasks. Our approach discards the higher spatial resolution, slower operating speeds and bulky components typical to classical Fourier optics in favour of a resolution much closer to the size of the kernels used by a CNN, all in a much smaller integrated form factor that works at the significantly higher clock speeds provided by the switch to silicon photonics. Because the actual calculations happen in the optical regime, both electrical power consumption and thermal emission are also greatly reduced.

Perhaps best of all, at least from the shorter-term perspective of cracking the problem for the first time, is that the practical gains that can be had from the use of an optical computing system are generic; they come from vastly enhanced computational performance and efficiency, rather than the design of highly specialised electronic silicon. This would make developing or altering models and methods much more flexible, allowing for greater experimentation and innovation over shorter development cycles in much the same way that GPU computing drove a boom in deep learning complexity.

Next steps

The self-driving vehicle problem doesn’t exist in isolation. The AI techniques involved in making autonomous vehicles work are themselves widely applicable to other scenarios. While we can accelerate these tools directly with our optical system, we’re also interested in pushing things forward.

One piece of research that caught our eye in this respect is the use of neural networks for the evaluation of formal logic statements. We’re particularly interested in the idea that images of objects can also be interpreted as logical symbols. With that in mind, one of the next things we’re turning our eye towards in the AI space is blending logic evaluation with autonomous feature extraction with the intention of creating a learnable and interrogatable semantic model for the self-driving vehicle approach that conceptually fuses both the end-to-end and mediated perception approaches.

To the best of our knowledge, this hasn’t been proposed as an approach before, so our next steps are also intended to demonstrate that our optical system can be applied to unseen problems and used as part the development of entirely new methods. We’ll be explaining our concepts and approach to this problem in greater detail in our next article on the subject. For now, if you have questions about this or any of the other applications we’ve covered, you can drop us a message here.