Key security risks in Federated Learning

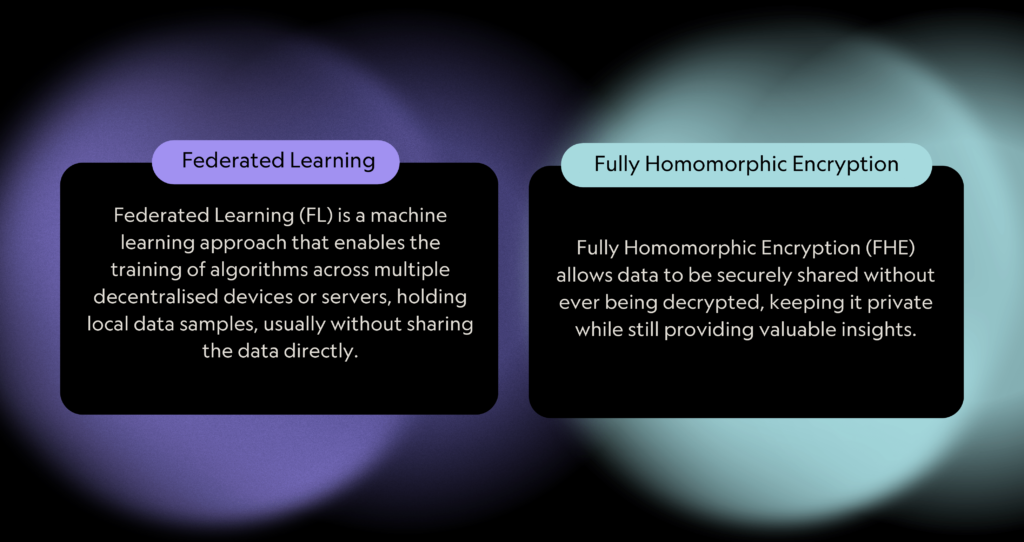

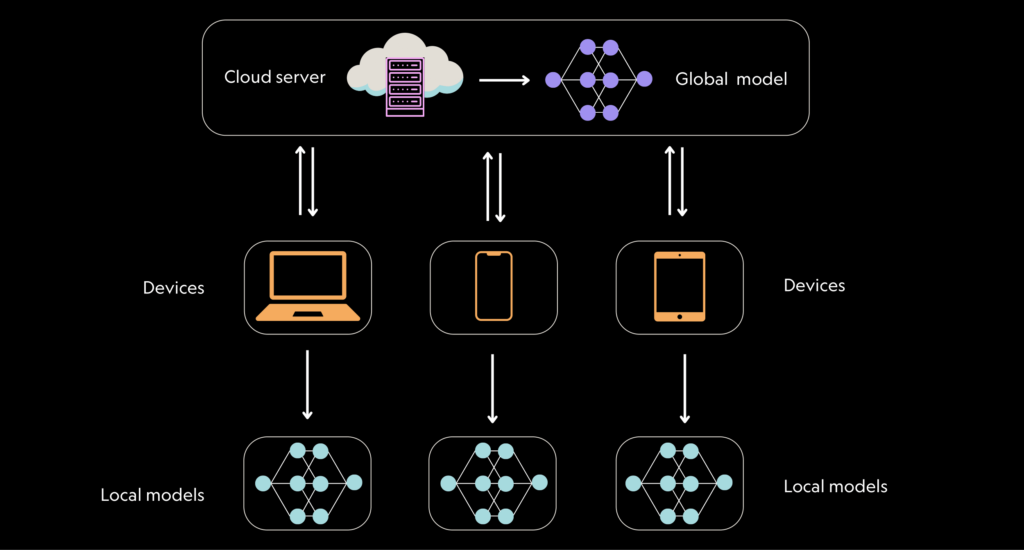

What is Federated Learning (FL)?

Federated Learning (FL) is a machine learning approach that trains a shared model across multiple decentralised devices or servers, each holding its own local data samples, usually without sharing that data directly. By keeping data local, FL helps address privacy concerns and regulatory requirements, which makes it appealing for industries that handle sensitive data, such as finance, healthcare, and defence. However, doubts around trust remain, especially given the stringent data security demands in these sectors.

Characteristics of Federated Learning

- Decentralised Data: Data usually remains distributed across devices or servers

- Collaborative Learning: Multiple parties contribute to improving a shared model without directly sharing their data

- Privacy Preservation: Only model updates, not raw data, are exchanged between participants and a central server

- Iterative Process: The model is refined through multiple rounds of local training and global aggregation

- Heterogeneous Data: FL can handle diverse and non-identically distributed data across participants
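
The iterative process described in the list above can be sketched in a few lines of Python. This is a minimal illustration of federated averaging, assuming a toy one-feature linear model and three hypothetical participants with synthetic data. The function names (`local_train`, `federated_average`, `run_federated_training`) are invented for this sketch and do not come from any FL library.

```python
from typing import List, Tuple

Weights = List[float]                # [slope, intercept] of a toy linear model
Dataset = List[Tuple[float, float]]  # (feature, target) pairs held by one client


def local_train(weights: Weights, data: Dataset,
                lr: float = 0.05, epochs: int = 5) -> Weights:
    """Run a few epochs of gradient descent on one client's private data."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Combine client weights, weighting each client by its dataset size."""
    total = sum(sizes)
    return [
        sum(u[i] * n for u, n in zip(updates, sizes)) / total
        for i in range(len(updates[0]))
    ]


def run_federated_training(clients: List[Dataset], rounds: int = 200) -> Weights:
    """Alternate local training and global aggregation for a number of rounds."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        # Only weights leave each client; the raw (x, y) pairs never do.
        updates = [local_train(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


# Synthetic, hypothetical data: each client sees a different slice of y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
    [(-1.0, -1.0), (0.5, 2.0)],
]
model = run_federated_training(clients)
```

With this noise-free data, `model` should approach a slope of 2 and an intercept of 1, even though no client ever shares its raw records. Production systems add client sampling, secure transport, and much larger models on top of this basic loop.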

A loan decision example

Imagine that we are a technology provider specialising in loan application decision-making, with our solutions already deployed across multiple banks. To enhance model accuracy, we aim to implement FL across our client base. All necessary permissions, compliance approvals, and agreements have been secured, ensuring no personal data is shared inappropriately – every client has given their consent to participate.

This initiative will help improve loan decision-making and deliver better outcomes for customers, benefiting the entire industry. However, to ensure the security and privacy of sensitive data, we must address key concerns surrounding data integrity and protection.

Top 4 security risks in Federated Learning

1. Data leakage

Sensitive information can be inferred from model updates. For instance, in our loan decision system, updates may reveal approval patterns, such as favouring certain loan sizes or customer profiles, which competitors could exploit.

2. Protection of Intellectual Property (IP)

The IP embedded in ML models, such as proprietary criteria used to assess loan default risks, could be compromised if not properly protected.

3. Central aggregator vulnerability

In traditional FL setups, a central aggregator can access incremental model updates and derive insights into each participant’s data. For example, a central aggregator might infer that a specific bank focuses on high-value mortgage loans based on recurring model update patterns. Decentralised approaches, which remove the single central aggregator, are designed to reduce this risk.

4. Model stealing

Participants may attempt to build their own global model using incremental updates they receive. For instance, a participant bank might seek to reconstruct a shared loan decision model to gain a competitive advantage without collaborating further.

Federated learning and Fully Homomorphic Encryption (FHE): a fully secure solution?

Looking back at our loan decision example, here are the key security questions to consider:

- Who has access to my data, particularly during model training?

- Who manages the shared data environment?

- What security measures are in place to protect my data?

- What vetting processes ensure data privacy and security?

- How is data loss prevented?

Who has access to my data? Data leaks

This question matters most during FL model training. In loan decision-making, data confidentiality and leakage are significant concerns: a leak that exposes Personally Identifiable Information (PII) could compromise client privacy and trust, and result in substantial regulatory fines. Addressing these concerns is essential to build confidence and ensure secure, accurate outcomes.

FHE enables computations on encrypted data, ensuring sensitive information remains protected. With FHE, data stays encrypted at all times, including while it is being processed, meaning even those managing the shared environment cannot access unencrypted data. This goes a long way towards addressing concerns around data leakage and confidentiality.

Who has access to the model? Model data leakage

FL often involves sharing model updates among parties, raising concerns about model data leakage, where sensitive information could be inferred from these updates. In the context of a loan decision system, bad actors who inspect the model weights or updates could infer PII about clients.
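
To see why a shared update can leak inputs, consider the simplest possible case. The sketch below assumes a hypothetical logistic-regression loan model whose update was computed from a single applicant record; the feature names and values are invented. For such a model, the weight gradient is the input scaled by the prediction error and the bias gradient is the error itself, so dividing one by the other recovers the record.

```python
import math
from typing import List, Tuple


def single_sample_gradient(weights: List[float], bias: float,
                           features: List[float], label: float
                           ) -> Tuple[List[float], float]:
    """Cross-entropy gradient of a logistic model for one training record."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    prediction = 1.0 / (1.0 + math.exp(-z))
    error = prediction - label               # dLoss/dz
    grad_w = [error * x for x in features]   # dLoss/dw = error * x
    grad_b = error                           # dLoss/db = error
    return grad_w, grad_b


def reconstruct_features(grad_w: List[float], grad_b: float) -> List[float]:
    """What an observer of the update can compute: x = grad_w / grad_b."""
    return [g / grad_b for g in grad_w]


# Hypothetical model state and applicant record
# (scaled income, debt ratio, prior-default flag).
weights = [0.2, -0.1, 0.05]
bias = 0.1
applicant = [0.62, 0.35, 1.0]

grad_w, grad_b = single_sample_gradient(weights, bias, applicant, label=1.0)
recovered = reconstruct_features(grad_w, grad_b)
```

Here `recovered` matches `applicant` up to floating-point error. Real updates are averaged over batches and span many more parameters, which makes recovery harder, but the underlying problem is the same: a plaintext update is a function of the private data.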

Using FHE, model updates are encrypted, ensuring no single party can infer individual data points from shared updates. This helps protect against gradient inversion attacks, which aim to recover original inputs from model updates.
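
The idea of aggregating updates without seeing them can be illustrated with a small sketch. A real FHE scheme is too large to show here, so this example swaps in the Paillier cryptosystem, which is only additively homomorphic and is not FHE. Addition is all that summing updates requires. The primes are toy values and far too small to be secure, and every name in the code is hypothetical.

```python
import math
import secrets
from typing import List

# Toy key material: two well-known Mersenne primes. NOT secure, illustration only.
P = 2**31 - 1
Q = 2**61 - 1
N = P * Q                      # public modulus
N_SQ = N * N
G = N + 1                      # standard Paillier generator choice
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # private: lcm(P-1, Q-1)
MU = pow(LAM, -1, N)           # private: modular inverse (Python 3.8+)
SCALE = 10**6                  # fixed-point scaling for float updates


def encrypt(value: float) -> int:
    """Encrypt a float with the public key (N, G)."""
    m = round(value * SCALE) % N           # negative values wrap modulo N
    while True:
        r = secrets.randbelow(N - 1) + 1   # random blinding factor in [1, N-1]
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def add_encrypted(ciphertexts: List[int]) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts."""
    total = 1
    for c in ciphertexts:
        total = (total * c) % N_SQ
    return total


def decrypt(ciphertext: int) -> float:
    """Decrypt with the private key (LAM, MU)."""
    u = pow(ciphertext, LAM, N_SQ)
    m = ((u - 1) // N) * MU % N
    if m > N // 2:                         # map back to a signed value
        m -= N
    return m / SCALE


# Hypothetical two-parameter updates from three banks.
bank_updates = [
    [0.125, -0.5],
    [0.25, 0.75],
    [-0.375, 0.25],
]

# Each bank encrypts its own update before sending it.
encrypted = [[encrypt(v) for v in update] for update in bank_updates]

# The aggregator sums each coordinate without ever decrypting anything.
encrypted_sums = [add_encrypted(list(column)) for column in zip(*encrypted)]

# Only a holder of the private key can read the aggregate.
averaged = [decrypt(c) / len(bank_updates) for c in encrypted_sums]
```

In this sketch the aggregator only ever handles ciphertexts, so it learns nothing about an individual bank's update. Who holds the decryption key is a separate design decision that the sketch does not address; a deployment would need to keep it away from the aggregator.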

Who has access to the model? Protecting Intellectual Property (IP)

ML models represent proprietary knowledge, and model weights embody this IP. In FL, risks exist around extracting these weights or inferring sensitive details about model behaviour. FHE ensures that model weights remain encrypted, protecting the IP of model owners.

Alternatively, model owners may opt to host the model themselves and securely bring data to the model. However, this increases complexity in maintaining data security compliance and may put off some participants who do not want to share their data directly, unless they’re using technology like FHE.

Real-world implications

Federated Learning holds great promise for improving model accuracy, but it continues to face significant security challenges. Concerns around intellectual property protection, data privacy, and potential data leakage are particularly critical in sectors that handle sensitive information. Real-world instances of data breaches and adversarial attacks underscore the urgent need for continued investment in security.

The global federated learning market was valued at USD 133.1 million in 2023. By 2032, it is expected to grow to USD 311.4 million, reflecting a compound annual growth rate (CAGR) of 10.2% from 2023 to 2032 (Source). This growth highlights the increasing adoption of FL and the need for FHE and other Privacy-Enhancing Technologies (PETs) to protect the integrity of FL systems, particularly in data-sensitive industries.

At Optalysys, we accelerate Fully Homomorphic Encryption (FHE) with silicon photonics, pushing the boundaries of what’s possible in secure computing by enhancing speed, efficiency, and scalability.